AI Glossary (A–Z)

A

- Accuracy: The proportion of correct predictions made by a model compared to total predictions.

- Algorithm: A step-by-step computational procedure used to solve problems or perform data processing tasks.

- Artificial Intelligence (AI): The branch of computer science that enables machines to mimic human intelligence and behavior.

- Artificial Neural Network (ANN): A network of interconnected nodes (neurons), loosely inspired by the structure of the human brain, that learns to map inputs to outputs.

- Agent: An autonomous entity that perceives its environment and acts to achieve defined goals.
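
As a minimal sketch of the Accuracy entry above, the following Python function divides the number of correct predictions by the total number of predictions. The function name `accuracy` and the sample labels are illustrative assumptions, not taken from any particular library.

```python
def accuracy(y_true, y_pred):
    """Return the fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one prediction is required")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical labels: 4 of the 5 predictions are correct.
labels = [1, 0, 1, 1, 0]
predictions = [1, 0, 0, 1, 0]
print(accuracy(labels, predictions))  # 0.8
```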

B

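- Backpropagation: An algorithm for training neural networks that propagates the prediction error backward through the layers to compute the gradient of the loss with respect to each weight, so that an optimizer can adjust the weights.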

- Bias (AI): Systematic error that causes unfair or skewed model predictions due to flawed data or design.

- Big Data: Extremely large datasets that require advanced computational techniques for processing and analysis.

- Bayesian Network: A probabilistic graphical model that represents variables as nodes in a directed acyclic graph and their conditional dependencies as edges.

- Batch Processing: Executing data operations in groups rather than one at a time for efficiency.

C

- Chatbot: A conversational AI system that interacts with users through text or voice.

- Classification: A supervised learning method used to assign data into predefined categories.

- Clustering: An unsupervised learning technique for grouping similar data points without predefined labels.

- Computer Vision: A field of AI that allows computers to interpret and understand visual data such as images or videos.

- Convolutional Neural Network (CNN): A deep learning model designed for analyzing visual data through convolutional layers.

D

- Data Augmentation: Techniques for increasing the diversity of training data by applying transformations like rotation or scaling.

- Data Mining: Discovering hidden patterns, correlations, and insights from large datasets.

- Dataset: A structured collection of data used to train and evaluate models.

- Decision Tree: A flowchart-like model that reaches a prediction by asking a sequence of questions about feature values, with each answer selecting the next branch.

- Deep Learning: A subset of machine learning that uses multi-layer neural networks to learn complex patterns from data.

E

- Edge AI: Running AI algorithms locally on hardware devices rather than in the cloud to reduce latency.

- Embedding: A way to represent words, images, or data in numerical vector form for machine learning models.

- Epoch: One complete pass through the entire training dataset during model training.

- Exploratory Data Analysis (EDA): The process of analyzing datasets to summarize their main characteristics visually and statistically.

- Expert System: An AI program that mimics the decision-making ability of human experts.

F

- Feature Engineering: Creating or modifying input variables to improve model performance.

- Fine-Tuning: Adjusting a pre-trained model on a smaller, specific dataset to specialize its performance.

- Federated Learning: A distributed AI approach where models are trained across decentralized devices without sharing raw data.

- Few-Shot Learning: Training AI models to generalize well from very few examples.

- Framework: A software platform for building and training AI models (e.g., TensorFlow, PyTorch).

G

- Generative AI: AI systems capable of creating new content such as text, images, code, or music.

- Gradient Descent: An optimization algorithm that minimizes a loss function by repeatedly adjusting model parameters in the direction of the negative gradient.

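- Generative Adversarial Network (GAN): A deep learning framework in which two networks compete: a generator creates synthetic data and a discriminator tries to tell it apart from real data, pushing the generator toward realistic output.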

- Graph Neural Network (GNN): A model designed to process graph-structured data.

- Ground Truth: The actual, verified data used to evaluate model accuracy.

H

- Heuristic: A rule-of-thumb approach used to solve problems more quickly when exact solutions are impractical.

- Hyperparameter: A setting chosen before training, rather than learned from the data, that controls how a model learns, such as the learning rate or batch size.

- Hybrid AI: Systems that combine symbolic reasoning with machine learning for more explainable intelligence.

- Human-in-the-Loop (HITL): A system where humans provide feedback during AI training or decision-making.

I

- Inference: The process of making predictions using a trained AI model.

- Interpretability: The degree to which a human can understand an AI model’s decision-making process.

- Image Recognition: The ability of AI systems to identify objects or features within images.

- Imbalanced Data: A dataset in which some classes are much more heavily represented than others, which can bias a model toward the majority class.

- Intelligent Agent: A program capable of perceiving its environment and taking action to achieve goals autonomously.

J

- Jupyter Notebook: An interactive development tool commonly used for data analysis and AI experimentation.

- Joint Probability: The likelihood of two or more events occurring together.

- JSON (JavaScript Object Notation): A lightweight text format often used to exchange structured data in AI applications.

K

- K-Means Clustering: A method for dividing data into K groups based on feature similarity.

- Knowledge Graph: A structured network of real-world entities and their relationships.

- K-Nearest Neighbors (KNN): A simple algorithm that classifies data points based on nearby neighbors.

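- Kernel Function: A function, used in SVMs and other kernel methods, that measures the similarity of two data points as if they had been mapped into a higher-dimensional space, without computing that mapping explicitly.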
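
The K-Nearest Neighbors entry above can be sketched in a few lines of Python. This is an illustrative version that assumes Euclidean distance and a simple majority vote; the function name `knn_predict` and the sample points are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Two well-separated hypothetical groups labeled "a" and "b".
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(points, labels, (1.1, 1.0)))  # a
print(knn_predict(points, labels, (5.1, 5.0)))  # b
```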

L

- Latent Space: The abstract representation of compressed data features learned by a model.

- Large Language Model (LLM): AI trained on vast text data to generate and understand natural language.

- Learning Rate: A hyperparameter that sets the size of each step the optimizer takes when updating model parameters.

- Linear Regression: A technique modeling the relationship between dependent and independent variables.

- Loss Function: A function that measures how far a model’s predictions are from the actual outcomes; training aims to minimize it.
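
To connect the Linear Regression and Loss Function entries above, here is a small sketch that fits a straight line by ordinary least squares and scores it with mean squared error. The helper names `fit_line` and `mean_squared_error` and the sample data are assumptions made for illustration.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data lying exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(xs, ys)
predictions = [slope * x + intercept for x in xs]
print(slope, intercept)                      # 2.0 1.0
print(mean_squared_error(ys, predictions))   # 0.0
```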

M

- Machine Learning (ML): A subset of AI where systems learn from data.

- Model Training: The process of teaching an algorithm to recognize patterns.

- Multimodal AI: AI that processes and combines multiple data types, such as text, images, and audio.

- Metric: A measure of model performance.

- Meta-Learning: “Learning to learn”: training a model across many tasks so that it can adapt quickly to new ones.

N

- Natural Language Processing (NLP): AI focused on understanding human language.

- Neural Network: A system of interconnected nodes that detect patterns.

- Normalization: Adjusting input data to a standard scale.

- Noise: Irrelevant or random data.

- Node: A computational unit in a neural network.

O

- Optimization: Adjusting parameters to minimize errors.

- Overfitting: When a model fits its training data too closely, including the noise, and therefore generalizes poorly to new data.

- Object Detection: Identifying and locating objects in images/videos.

- Ontology: A framework defining entities and relationships.

- Outlier: A data point significantly different from others.

P

- Precision: The ratio of true positives to all predicted positives.

- Predictive Modeling: Using algorithms to predict outcomes.

- Prompt Engineering: Designing inputs that guide AI models.

- Preprocessing: Cleaning and transforming raw data.

- Parameter: An internal variable, such as a weight, whose value is learned during training.
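
The Precision entry above translates directly into code. The sketch below counts true and false positives for a binary task; the function name `precision`, the `positive` argument, and the sample labels are illustrative assumptions.

```python
def precision(y_true, y_pred, positive=1):
    """Return true positives divided by all predicted positives."""
    true_pos = sum(
        1 for t, p in zip(y_true, y_pred) if p == positive and t == positive
    )
    false_pos = sum(
        1 for t, p in zip(y_true, y_pred) if p == positive and t != positive
    )
    if true_pos + false_pos == 0:
        # No positive predictions were made; return 0.0 by convention here.
        return 0.0
    return true_pos / (true_pos + false_pos)


# Hypothetical labels: 3 predicted positives, 2 of them correct, so 2/3.
labels = [1, 0, 1, 1, 0, 0]
predictions = [1, 1, 1, 0, 0, 0]
print(precision(labels, predictions))
```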

Q

- Quantization: Reducing model size with lower-precision values.

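- Q-Learning: A model-free reinforcement learning algorithm that learns the expected long-term reward (the Q-value) of taking each action in each state.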

- Quality Assurance (AI): Ensuring models meet performance and fairness standards.
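
For the Q-Learning entry above, the core of the algorithm is a single update rule applied after each step. The sketch below shows that update for a tabular setting; the function name `q_update`, the state and action names, and the values of `alpha` (learning rate) and `gamma` (discount factor) are assumptions chosen for illustration.

```python
from collections import defaultdict


def q_update(q_table, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q-value."""
    # Best value obtainable from the next state under the current estimates.
    best_next = max(q_table[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


# All Q-values start at 0.0; one rewarded step moves Q(s0, right) toward 1.0.
q = defaultdict(float)
actions = ["left", "right"]
print(q_update(q, "s0", "right", 1.0, "s1", actions))  # 0.1
```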

R

- Reinforcement Learning (RL): Learning through trial, error, and rewards.

- Regularization: Techniques, such as L1/L2 penalties or dropout, that constrain a model to reduce overfitting.

- Regression: Predicting continuous values.

- Retrieval-Augmented Generation (RAG): Retrieving relevant documents at query time and supplying them to a generative model as context for its answer.

- Robotics: Designing autonomous machines.

S

- Scalability: Ability to handle more data or users.

- Semi-Supervised Learning: Training with labeled + unlabeled data.

- Sentiment Analysis: Determining emotional tone in text.

- Supervised Learning: Training on labeled input-output pairs.

- Support Vector Machine (SVM): An algorithm for classification and regression that finds the boundary separating classes with the widest possible margin.

T

- Tensor: A multidimensional array used in deep learning.

- Tokenization: Splitting text into smaller units (tokens), such as words, subwords, or characters.

- Transfer Learning: Applying learned knowledge to new tasks.

- Transformer: A neural network architecture built on self-attention; it underlies most modern language models.

- Training Data: Data used to teach models.

U

- Unsupervised Learning: Finding patterns in unlabeled data.

- Underfitting: When a model is too simple to capture the underlying patterns, so it performs poorly on both training data and new data.

- Unstructured Data: Data without a fixed format.

- Upsampling: Increasing data size or resolution.

- Utility Function: A function that assigns a numeric value to each outcome, expressing how well it satisfies an agent’s goals.

V

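- Validation Set: Held-out data used during development to tune hyperparameters and compare models, kept separate from both the training data and the final test set.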

- Vector Database: A system for storing/querying embeddings.

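- Variance: How much a model’s predictions change when it is trained on different samples of the data; high variance is associated with overfitting.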

- Vision Transformer (ViT): Transformer architecture for images.

- Voice Recognition: Identifying a speaker from audio; the term is also used loosely for speech recognition (transcribing spoken words).

W

- Weight: A learned model parameter that scales how strongly an input or connection influences the output.

- Word Embedding: Vector representation of words.

- Weak Supervision: Training with imperfect labels.

- Workflow Automation: Using AI to streamline processes.

- White Box Model: A transparent, explainable model.

X

- XAI (Explainable AI): AI designed for interpretability.

- XML: A format for structuring data.

- XGBoost: A high-performance gradient boosting algorithm.

Y

- Yield Prediction: Forecasting production outcomes.

- YOLO (You Only Look Once): A family of real-time object detection models.

- Yottabyte: A unit of digital information equal to one septillion bytes.

Z

- Zero-Shot Learning: Performing a task or recognizing a class without having seen any labeled examples of it during training.

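- Z-Score Normalization: Standardizing data by subtracting the mean and dividing by the standard deviation, so that the values have mean 0 and standard deviation 1.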

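- Zone of Proximal Development: A concept from educational psychology (Vygotsky) describing tasks a learner can complete with guidance but not yet alone; by analogy, the range of tasks where an AI system improves with minimal guidance.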
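
The Z-Score Normalization entry above can be illustrated with Python's standard `statistics` module. This sketch assumes the population standard deviation is the intended one; the function name `z_score_normalize` and the sample values are hypothetical.

```python
import statistics


def z_score_normalize(values):
    """Rescale values to have mean 0 and (population) standard deviation 1."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("cannot standardize values with zero variance")
    return [(v - mean) / std for v in values]


# Hypothetical measurements with mean 5 and population standard deviation 2.
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
scaled = z_score_normalize(data)
print(scaled)
```

After scaling, the smallest value sits 1.5 standard deviations below the mean and the largest sits 2 above it, which makes features measured in different units directly comparable.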

Summary: Understanding Artificial Intelligence

1. Introduction

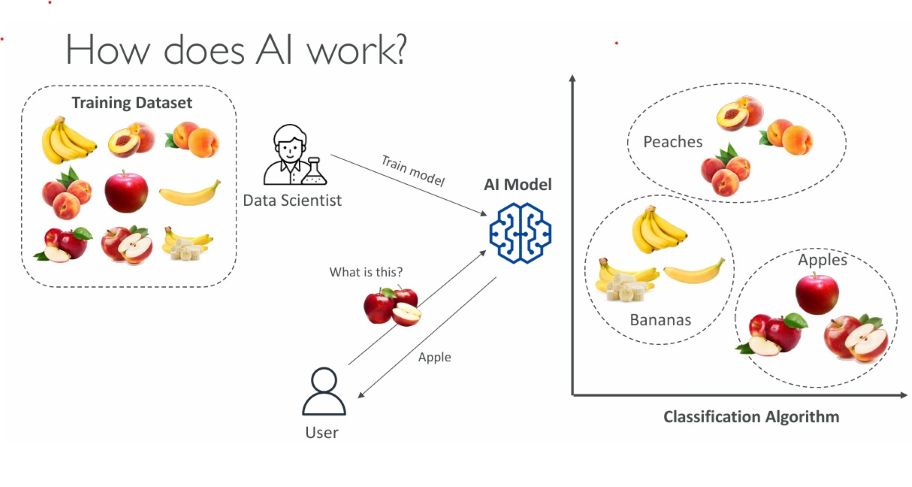

Artificial Intelligence (AI) is the science of creating machines that can perform tasks requiring human-like intelligence — such as perception, reasoning, language understanding, and decision-making. What began as symbolic rule-based systems has evolved into data-driven models powered by machine learning (ML), deep learning (DL), and generative AI.

2. Foundations of AI

The foundation of AI lies in algorithms, data, and computation. Data fuels training, algorithms define how learning occurs, and computational resources (like GPUs) enable large-scale model development. Early AI used logic and if–then rules, while modern AI relies on statistical learning and neural networks to extract patterns from massive datasets.

3. Machine Learning and Deep Learning

Machine Learning allows computers to learn patterns from data and improve over time without explicit programming. It includes supervised, unsupervised, and reinforcement learning approaches. Deep Learning, a subfield of ML, uses layered neural networks to model complex data relationships, transforming fields like computer vision and natural language processing.

4. Generative AI and LLMs

Generative AI creates new content, from realistic images to human-like text. Large Language Models (LLMs) such as GPT use transformer architectures and embeddings to understand and produce natural language. Prompt engineering shapes the input to steer the model’s output, and retrieval-augmented generation supplies relevant documents at query time, which can improve the contextual accuracy of responses.

5. Core Technologies

AI encompasses Natural Language Processing (NLP), Computer Vision, and Reinforcement Learning. Vector databases and embeddings have become vital for efficient data retrieval and contextual understanding, powering modern AI assistants and autonomous systems.
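
As a rough sketch of how embeddings support retrieval, the example below stores a few vectors in a dictionary and returns the entry most similar to a query by cosine similarity. The three-dimensional vectors and the names `cosine_similarity`, `top_match`, and `store` are made up for illustration; real embeddings have hundreds or thousands of dimensions, and a vector database would use an index rather than a linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_match(query, store):
    """Return the key in `store` whose vector is most similar to `query`."""
    return max(store, key=lambda name: cosine_similarity(query, store[name]))


# Hypothetical 3-dimensional "embeddings" for three items.
store = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.3, 0.1],
    "car": [0.0, 0.2, 0.9],
}
print(top_match([0.1, 0.1, 0.8], store))  # car
```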

6. Training, Optimization, and Deployment

Training involves minimizing a loss function using methods like gradient descent. Models are validated to avoid overfitting and deployed via APIs or cloud platforms. Edge AI allows inference directly on devices, reducing latency and dependency on centralized servers.
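
The idea of minimizing a loss with gradient descent can be sketched on a one-parameter problem. The example below assumes a toy loss of (w - 3)^2, whose gradient is 2(w - 3); the function name `gradient_descent`, the learning rate, and the step count are illustrative choices, not recommended settings.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    w = start
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


def loss_gradient(w):
    """Gradient of the toy loss (w - 3) ** 2, which is minimized at w = 3."""
    return 2 * (w - 3)


w_final = gradient_descent(loss_gradient, start=0.0)
print(round(w_final, 4))  # 3.0
```

Each step shrinks the distance to the minimum by a constant factor here; a learning rate that is too large would instead overshoot and diverge.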

7. Ethics, Fairness, and Explainability

Modern AI must address bias, privacy, and transparency challenges. Explainable AI (XAI) and Human-in-the-Loop systems promote accountability and trust. Responsible AI practices aim to ensure fairness, inclusivity, and ethical innovation.

8. Applications Across Industries

AI drives transformation in healthcare, finance, manufacturing, and education. From predictive diagnostics and fraud detection to personalized learning and automation, AI enhances productivity, efficiency, and insight across sectors.

9. The Future of AI

The next generation of AI will focus on multimodal systems, hybrid reasoning, and autonomous agents capable of decision-making across domains. Federated learning will enhance privacy, and quantum computing may accelerate AI’s problem-solving capacity. The partnership between humans and AI will define innovation in the coming decades.

WorkInProgress- PS

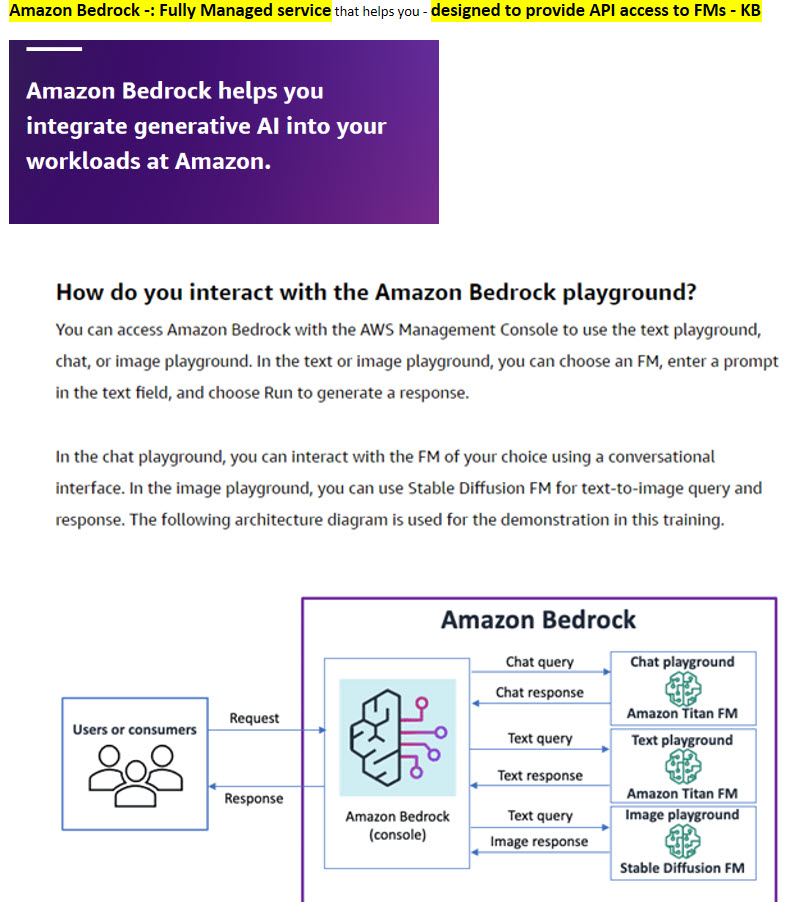

Bedrock - API Access to Foundation Models (FMs)

How Does It Work

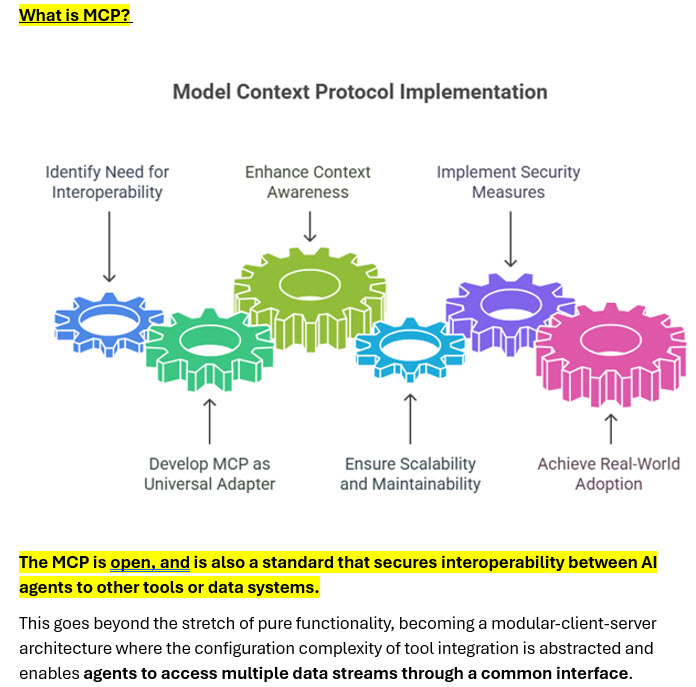

MCP